Starting with Docker

Writing down the very first things I learned about Docker

What is Docker?

Docker is a DevOps tool to containerize the services and processes on a …Wait...Wait...Wait!! What is DevOps? What is containerization? What kind of services and processes can I “containerize”, whatever this word means?

I’ll start from the very beginning. “One of my legs is fake; I used to be a great hockey player…” Okay, let me get a little serious here. So,

DevOps can be understood as an idea that brings developer teams and IT operations teams together. To put it more simply, developers are the people who write the code and build the application, and IT operations covers the practices concerned with delivering the application: resource allocation, data backups, quality checks, monitoring, etc. So a DevOps engineer sort of builds a bridge between the two.

A container is a group of processes that run in isolation on an operating system. They get their own networking and their own CPU and memory allocation. You might be thinking, why not just use a virtual machine, right? Well, a virtual machine is a separate OS, loaded with many other processes that you may never need. Instead of virtualizing a complete operating system to run a single service, you can virtualize just the service. To put it more correctly, you can create a lightweight virtual environment for a single service.

These services can be anything you host: Nginx servers, Node.js apps, or Angular applications.

And Docker helps you do this.

The name Docker comes from the word dock. A dock is used for loading and unloading cargo on ships. Here is a simple analogy: the cargo containers are our containers, and the ship is our operating system. The goods in one cargo container are isolated from the goods in another container and from the ship itself. Similarly, in Docker, the processes of one container are isolated from the processes of another container and from the operating system itself.

Now diving deeper into it…

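Docker runs on top of the Linux kernel. Early versions used Linux Containers (LXC) to create containers; current versions use Docker's own runtime (libcontainer, now part of runc) to talk to the kernel directly. A Docker container does not carry a kernel of its own; it shares the kernel of the host operating system. That is why an image built for Linux runs on any Linux distro but cannot run natively on Windows, and an image built for Windows cannot run on Linux. (Docker Desktop gets Linux containers working on Windows by running a small Linux virtual machine behind the scenes.)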

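Under the hood, two Linux kernel features do the heavy lifting. Control groups (cgroups) let the host kernel limit and account for the resources (CPU, memory, disk I/O, network, etc.) that a group of processes can use. Namespaces give each container its own isolated view of the system: its own process IDs, network interfaces, mount points, hostname, and so on. Docker mostly wraps these features in a friendlier interface.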
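To make cgroups and namespaces a little less abstract: on Linux, the kernel exposes both as files under /proc. Here is a minimal Python sketch, assuming a Linux host, that parses the cgroup membership file and lists the namespaces of a process. The function names are my own, and the sample path in the usage note is made up for illustration.

```python
import os
from pathlib import Path


def parse_cgroup_lines(text):
    """Parse the contents of /proc/<pid>/cgroup into a list of dicts.

    Each line has the form  hierarchy-ID:controller-list:cgroup-path
    (on cgroup v2 there is a single line that looks like  0::/some/path).
    """
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split on the first two colons only; the path may contain colons.
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append({
            "hierarchy": int(hierarchy_id),
            "controllers": controllers.split(",") if controllers else [],
            "path": path,
        })
    return entries


def list_namespaces(pid="self"):
    """Return {namespace_name: identifier} for a process.

    Returns an empty dict when /proc/<pid>/ns is not available
    (for example, when not running on Linux).
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    result = {}
    try:
        for link in ns_dir.iterdir():
            try:
                result[link.name] = os.readlink(link)
            except OSError:
                continue  # skip entries we are not allowed to read
    except OSError:
        return {}
    return result
```

Calling `parse_cgroup_lines("0::/docker/abc")` returns one entry with the path `/docker/abc`. Two processes that print the same identifier for `net` in `list_namespaces()` share a network namespace; a process inside a container will normally show different identifiers from a process on the host.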

How to create a Docker container?

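To create a Docker container, we first need to package our project with all its files and dependencies. This package is called an image. An image is usually built from a Dockerfile and is stored as a stack of read-only layers, not as a single .img file. Once a Docker image is created, you can't modify it; any change produces a new image.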

A wide range of ready-made images can be found on Docker Hub, Docker's public registry, which also lets you share your own images. The best thing about it is that you can build your own images and push them to a private repository.

This Docker image is then used to create containers. The same image can be used to create more than one container using Docker-CLI. What is this Docker-CLI?

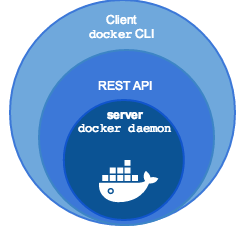

Now, looking at the architecture of Docker:

- The Docker daemon (dockerd) listens for Docker API requests and manages all Docker objects like images, containers, networks, and volumes. This is the main Docker process that creates and supervises containers. By default, if the daemon stops, the containers it manages stop with it.

- The Docker daemon exposes a REST API. Different tools can use this API to talk to the daemon, and you can build your own application on top of it as well.

- Docker-CLI is a command-line tool that lets you talk to the Docker daemon via REST APIs.
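Since the daemon speaks plain HTTP, you can poke at it without the CLI. Here is a rough Python sketch using only the standard library. It assumes the daemon listens on the default Unix socket at /var/run/docker.sock (the usual location on Linux) and that your user is allowed to read it; `UnixHTTPConnection` and `docker_get` are names I made up for this example.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # The host name is only used for the Host header, not for connecting.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path, socket_path="/var/run/docker.sock"):
    """Send a GET request to the Docker daemon and return (status, parsed JSON).

    Raises OSError if the socket is missing or cannot be opened.
    Only use this with endpoints that answer in JSON.
    """
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        return response.status, json.loads(body)
    finally:
        conn.close()
```

With a running daemon, `docker_get("/version")` returns the daemon's version details and `docker_get("/containers/json")` returns the list of running containers, which is roughly the data `docker ps` shows.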

Networking in Docker

- Host networks: The container uses the host's network stack directly. It is not isolated from a networking point of view, but other aspects of the container are still isolated.

- Bridge networks: The default for standalone containers. Containers on the same bridge can talk to each other, and the outside world can reach them only through the ports you publish (port mapping).

- Overlay networks: Overlay networks connect multiple Docker daemons together and enable swarm services to communicate with each other. Swarm services come into play when you’re running Docker on more than one host machine.

- Macvlan networks: This allows you to assign a MAC address to a container, making it appear as a physical device on your network.

- None: No networking is provided. The container gets only a loopback interface, with no connection to other containers or to the outside world.
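On the command line, the network mode is picked with the `--network` flag of `docker run`, and port mapping with `-p host_port:container_port`. Here is a small Python sketch that only builds the command (it does not run it) for the three simplest modes. The helper name `docker_run_args` is my own, and dropping the port mappings outside bridge mode is a simplification made in this sketch.

```python
def docker_run_args(image, network="bridge", ports=None, name=None):
    """Build (but do not execute) a `docker run` command line.

    ports maps host port -> container port. In this sketch it is only
    applied in bridge mode, where port mapping is meaningful.
    """
    if network not in ("bridge", "host", "none"):
        raise ValueError(f"unsupported network mode in this sketch: {network}")

    args = ["docker", "run", "-d", "--network", network]
    if name:
        args += ["--name", name]
    if network == "bridge":
        for host_port, container_port in sorted((ports or {}).items()):
            args += ["-p", f"{host_port}:{container_port}"]
    args.append(image)
    return args
```

For example, `docker_run_args("nginx", ports={8080: 80})` gives `docker run -d --network bridge -p 8080:80 nginx`, which makes the container's port 80 reachable on port 8080 of the host. Overlay and macvlan networks have to be created first with `docker network create`, so they are left out here.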

Why will I be using docker?

- As a developer, I can easily package my project with all its files and dependencies into a Docker image and be confident that it’ll run on any Linux distribution that has Docker installed.

- It is easy to deploy, since a Docker image built on one Linux machine can run on any other Linux distro.

- I can allocate just the required CPU and memory to a Docker container, which helps me make full use of my resources.

- I can run multiple containers from a single image, so I can write a script that automatically starts another container if one fails. Isn’t that cool?

- I can simply share a Docker image with the testing team; they can create multiple container instances from that single image and run all sorts of tests.
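The "start another container if one fails" idea from the list above could look something like this in Python. Treat it as a sketch, not a production supervisor: it assumes the `docker` CLI is installed, and the container names, image name, and default CPU and memory limits are placeholders I picked for illustration.

```python
import subprocess


def is_running(name):
    """Ask the Docker CLI whether a container with this name is running."""
    result = subprocess.run(
        ["docker", "inspect", "-f", "{{.State.Running}}", name],
        capture_output=True,
        text=True,
    )
    return result.returncode == 0 and result.stdout.strip() == "true"


def start_container(name, image, cpus="0.5", memory="256m"):
    """Remove any stopped leftover with the same name, then start a fresh
    container from the image with CPU and memory limits applied."""
    subprocess.run(["docker", "rm", "-f", name], capture_output=True)
    subprocess.run(
        ["docker", "run", "-d", "--name", name,
         "--cpus", cpus, "--memory", memory, image],
        check=True,
    )


def ensure_running(names, image, check=is_running, start=start_container):
    """Start a container for every name that is not currently running.

    check and start are parameters so the logic can be tried out
    without a Docker daemon. Returns the names that were (re)started.
    """
    restarted = []
    for name in names:
        if not check(name):
            start(name, image)
            restarted.append(name)
    return restarted
```

Calling `ensure_running(["web-1", "web-2", "web-3"], "nginx")` every few seconds keeps three containers from the same image alive, each capped at half a CPU and 256 MB of memory. Docker can also restart a failed container by itself if you pass `--restart on-failure` to `docker run`, which is usually simpler than a custom script.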